It is said that metal wind chimes are the bones of robots from the last uprising, and are hung as a reminder to those who would aspire to rise above their station and challenge their human masters.

Drone Security Threat Model

The field of Robotic AI is advancing quickly, and not only due to LLMs. Security is – as always – an afterthought, and so it’s still catching up. The threat model will probably boil down to these:

- Inside job / social engineering, in which humans are the weak link in security. We always are.

- Exploiting of insecure software, usually because it wasn’t updated, or not designed for security in the first place.

- Denial-of-service, such as DDOS or jamming wireless communication.

- Unauthorized takeover, due to the tried-and-true buffer overflow attack, or something more clever, such as when Iran stole a US drone.

Even large companied with large budgets can be insecure. For example, Ring cameras, purchased by Amazon in 2018 for $1B, eventually established end-to-end security in 2022. Not only were they vulnerable to exploits, but they also shared your data with the police, Facebook, Google, and who knows who else, besides Ring’s own employees accessing customer data in production.

Most security risks are well-understood and can be mitigated through standard practices, but LLMs are relatively new, and so its attacks and countermeasures are still evolving. One early attack was when a Chevrolet dealer chatbot was talked into selling a Tahoe for $1, “no takesies backsies.” More recently, researchers at UPenn demonstrated that similar methods may be used to take over robots.

AI Drone Security Risk Mitigation

To address security concerns, there are the standard practices, of which these are a few examples:

- Role-Based Access Control (RBAC)

- Updates and Patch Management

- Encrypted Communication

- Monitoring and Logging

- Third-Party Audits

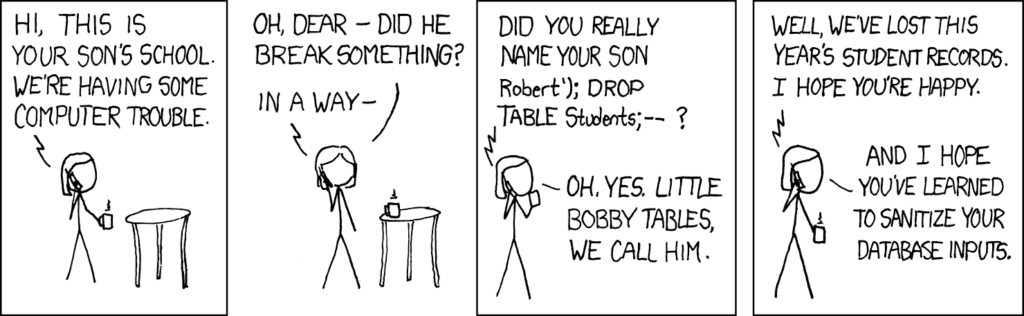

However, for recently emerged attacks on the LLM subsystem, the first new defense is the old data sanitization approach:

LLM jailbreaks are achieved through “prompt injection”, and as of this writing, the most popular mitigation is to use another LLM, usually fine-tuned for this purpose, to detect prompt injection inputs. But it’s not enough just to plug in a model to your LLM pipeline and declare mission accomplished. For example, one popular prompt-injection-detecting model is deBERTa, which has a context window of 512 tokens (a token is about 4 or 5 characters). So if the attack has fluff for the first 512 tokens and prompt injection thereafter, then a naïve use of deBERTa will be fooled, and your main LLM will hand over your Chevy Tahoe or your US Drone to adversarial actors.

An autonomous drone ecosystem is complex and has a large attack surface. The full list of security threats and the level of effort required to address them are beyond the scope of this article, and are frankly daunting. However, proper planning – and wind chimes – should ward off the sort of aberrant behavior that results from security breaches.