Servos and data and finite automata, that’s what AI drones are made of.

You can build a drone for $12 in parts. It doesn’t do anything except fly around, and even that not very well. In fact it will dash itself, and your hopes of inexpensive flight, to pieces almost immediately. The reason is data.

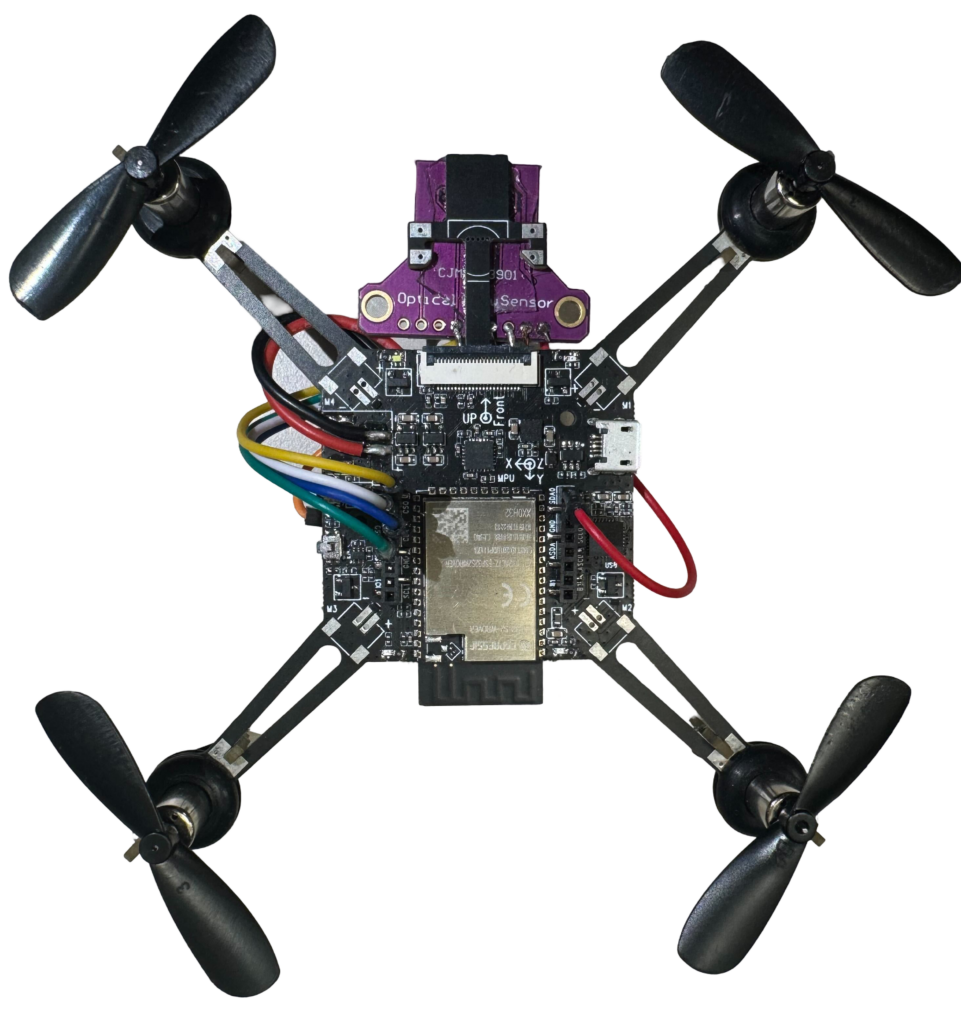

To make a stable remote-control drone, you will need — besides the battery and spinny bits — a variety of sensors, and the processing power to digest the incoming data. Commonly used sensors are:

1. Accelerometer.

2. Gyroscope.

3. Magnetometer (a compass).

These three are often grouped into a module called an inertial measurement unit (IMU).

4. Optical flow sensor, a downward-facing camera module used for stabilization. It doesn't output images, just motion data (how far the view of the ground has shifted between frames) and a quality metric.
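Optical flow measures how fast the image of the ground slides past the camera. The minimal sketch below shows one way to turn that into ground speed along one axis. It assumes a downward-facing camera over flat ground, small angles, a focal length in pixels, a known height above the ground (from a separate distance sensor), and the same sign convention for flow and gyro readings. The function name and parameters are hypothetical; real sensor modules and flight stacks each define their own units and conventions.

```python
def flow_to_ground_speed(flow_px_per_s: float, gyro_rate_rad_s: float,
                         focal_length_px: float, height_m: float) -> float:
    """Estimate horizontal ground speed (m/s) along one image axis.

    Hypothetical helper. Assumes a downward-facing camera, flat ground,
    small angles, and flow and gyro sharing one sign convention.
    """
    if focal_length_px <= 0 or height_m <= 0:
        raise ValueError("focal length and height must be positive")
    # Pixel flow -> angular rate of the scene as seen by the camera (small-angle).
    flow_rad_s = flow_px_per_s / focal_length_px
    # Tilting the drone also sweeps the image; subtract that rotational part.
    translational_rad_s = flow_rad_s - gyro_rate_rad_s
    # The same ground speed produces less flow the higher the camera is.
    return translational_rad_s * height_m


# 40 px/s of flow, 0.02 rad/s of pitch rate, 400 px focal length, 2 m up:
speed = flow_to_ground_speed(40.0, 0.02, 400.0, 2.0)  # roughly 0.16 m/s
```

The height term is why optical flow modules are often paired with a downward distance sensor: without it, the same pixel motion could mean slow flight close to the ground or fast flight high above it.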

Drones usually have a flight controller with an integrated IMU. The flight controller has its own processor and memory and is the central hub of the drone. It runs software such as PX4 or ArduPilot to process the sensor data, then uses the result to command the electronic speed controllers (ESCs) that drive the motors and keep the drone stable.
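To make "process the sensor data, then command the ESCs" concrete, here is a minimal single-axis sketch under stated assumptions: a complementary filter fuses gyro and accelerometer readings into a pitch estimate, a PID controller turns the pitch error into a correction, and a simple mixer spreads that correction over four motors. The axis convention, gains, and all function names are hypothetical. Real flight stacks such as PX4 and ArduPilot use more elaborate estimators (extended Kalman filters) and cascaded control loops across all three axes.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Assumed axis convention (hypothetical, for illustration): x forward, z up,
# pitch positive when the nose is up, accelerometer reads +1 g along z at rest.


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the gravity vector; valid only when not accelerating."""
    return math.atan2(ax, math.sqrt(ay * ay + az * az))


def complementary_filter(prev_pitch: float, gyro_rate: float, accel_angle: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (smooth, but drifts) with the
    accelerometer angle (noisy, but drift-free)."""
    return alpha * (prev_pitch + gyro_rate * dt) + (1.0 - alpha) * accel_angle


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Correction from the error, its accumulated sum, and its rate of change."""
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def mix_pitch(throttle: float, pitch_correction: float) -> List[float]:
    """Spread a pitch correction over motors [front-left, front-right, rear-left,
    rear-right]: raising the nose means spinning the front motors faster.
    Outputs are clamped to a 0..1 command range."""
    raw = [throttle + pitch_correction, throttle + pitch_correction,
           throttle - pitch_correction, throttle - pitch_correction]
    return [min(max(m, 0.0), 1.0) for m in raw]


def control_step(pitch: float, gyro_rate: float, accel: Tuple[float, float, float],
                 pid: PID, throttle: float, dt: float,
                 target_pitch: float = 0.0) -> Tuple[float, List[float]]:
    """One loop iteration: estimate attitude, compute a correction, mix it."""
    pitch = complementary_filter(pitch, gyro_rate, accel_pitch(*accel), dt)
    correction = pid.update(target_pitch - pitch, dt)
    return pitch, mix_pitch(throttle, correction)


pid = PID(kp=0.5, ki=0.0, kd=0.05)
tilt = math.radians(5)  # nose tipped up 5 degrees, drone otherwise at rest
accel = (math.sin(tilt), 0.0, math.cos(tilt))  # in units of g
pitch, motors = control_step(0.0, 0.0, accel, pid, throttle=0.5, dt=0.004)
# motors: front pair slightly below 0.5, rear pair slightly above, to lower the nose
```

A flight controller runs a loop like this hundreds of times per second; the filter's `alpha` trades gyro smoothness against accelerometer drift correction.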

Now your more-than-$12 drone has a chance at stable flight, assuming you put it all together correctly. However, at this point you still need a human pilot. What if you want a drone that flies itself, an autonomous drone? Then you will need more sensors, more software, and more processing power, depending on the environment and use case:

5. LiDAR pointing down, to know precisely how far away the ground is, for not-crash landings.

6. Range finder pointing forward, for collision, or obstacle, avoidance.

The difference between LiDAR and a range finder, as the terms are used here, is that while both use lasers to measure distance, the former is more precise and more expensive.
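Many laser distance sensors work by time of flight (others, especially cheap ones, use triangulation instead). Assuming time of flight, distance follows from the round-trip time of a light pulse, and the numbers suggest why precision costs money:

$$
d = \frac{c\,\Delta t}{2}
\quad\Longrightarrow\quad
\Delta t = \frac{2d}{c}, \qquad c \approx 3.0 \times 10^{8}\ \text{m/s}
$$

$$
d = 10\ \text{m}: \quad \Delta t = \frac{2 \times 10}{3.0 \times 10^{8}} \approx 66.7\ \text{ns}
\qquad\qquad
\delta d = 1\ \text{cm}: \quad \delta t = \frac{2 \times 0.01}{3.0 \times 10^{8}} \approx 66.7\ \text{ps}
$$

Resolving a centimetre means timing the returning pulse to within tens of picoseconds.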

The difference between collision avoidance and obstacle avoidance is that the former means “stop before colliding”, and the latter means “fly around the obstacle and keep going”, which is much harder to implement, as it involves path planning and decision making.
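The "stop before colliding" half is simple enough to sketch. The version below assumes the forward range finder reports distance in metres, the drone can brake at a known constant deceleration, and there is a fixed reaction latency; the function names and default values are hypothetical. It caps forward speed so the drone can always stop short of the obstacle. Obstacle avoidance would need a path planner on top of this.

```python
import math


def stopping_distance(speed: float, max_decel: float, latency: float) -> float:
    """Distance covered while reacting (latency) plus while braking at max_decel."""
    return speed * latency + speed * speed / (2.0 * max_decel)


def max_safe_speed(range_to_obstacle: float, max_decel: float,
                   latency: float, margin: float = 0.5) -> float:
    """Largest forward speed that still lets the drone stop `margin` metres short.

    Solves speed * latency + speed**2 / (2 * max_decel) = range - margin for speed.
    """
    usable = range_to_obstacle - margin
    if usable <= 0:
        return 0.0  # already too close: hold position
    a, t = max_decel, latency
    return -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * usable)


def limit_forward_speed(requested: float, range_to_obstacle: float,
                        max_decel: float = 3.0, latency: float = 0.2) -> float:
    """Collision avoidance only: clamp the requested speed; never steer around."""
    return min(requested, max_safe_speed(range_to_obstacle, max_decel, latency))


# With an obstacle 10.5 m ahead, a request for 20 m/s is cut to roughly 7.2 m/s.
allowed = limit_forward_speed(20.0, 10.5)
```

Solving for a speed limit, rather than just triggering a stop, lets the drone slow down smoothly as the obstacle gets closer.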

7. Camera for computer vision. Some drones use two “stereo” cameras pointed forward, for depth perception. Some drones have cameras pointed in other directions, such as downward to assist with precision landing or delivery.

8. GPS, for outdoor flight of any appreciable distance. Where GPS is unavailable, as in some indoor locations, the drone relies instead on other sensors, such as cameras or LiDAR, and on simultaneous localization and mapping (SLAM).

9. WiFi and/or LTE to receive commands and send back video, telemetry, and other information.

10. A companion computer to handle the autonomous requirements, such as video compression, real-time communication, and software that decides what commands to send to the flight controller now that you don’t have a human pilot with a remote control.

11. Software in the cloud to extend the drone’s capabilities, for example:

- Operation and instrumentation, so that the human operator sees where the drone is and what it’s doing, and can give it instructions.

- LLMs, computer vision, and other AI more powerful than what a companion computer can support, but with higher latency.

- Access to other cloud services, such as a maps API or data on restricted airspace to avoid.
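Item 7 mentions stereo cameras for depth perception. For a rectified stereo pair, a point's depth is inversely proportional to its disparity, how far it shifts between the left and right images: Z = f · B / d. The sketch below assumes a focal length in pixels and a baseline (camera separation) in metres; the helper names and the example numbers (700 px, 10 cm) are hypothetical. The second function estimates how a half-pixel matching error turns into depth error, which grows with the square of distance.

```python
import math


def stereo_depth(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth (m) of a point seen by both cameras of a rectified stereo pair.
    Closer objects shift more between the two images: Z = f * B / d."""
    if disparity_px <= 0:
        return math.inf  # no measurable shift: too far away, or a bad match
    return focal_length_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_length_px: float, baseline_m: float,
                disparity_error_px: float = 0.5) -> float:
    """Approximate depth uncertainty: |dZ| ~ Z**2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


z = stereo_depth(14.0, focal_length_px=700.0, baseline_m=0.1)  # 5 m
err_near = depth_error(5.0, 700.0, 0.1)   # about 0.18 m
err_far = depth_error(20.0, 700.0, 0.1)   # about 2.9 m
```

With a small baseline, stereo depth gets coarse quickly with range, so it suits nearby obstacles better than distant ones.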

At this point, your really-not-$12 drone is fairly bristling with sensors and is processing a torrent of data. It can fly itself around and send pictures back, too.

However, what if you want a single human operator to direct a fleet of heterogeneous drones to achieve objectives? That level of reasoning and multitasking calls for LLM agents. The human expresses a wish in natural language, which is processed in the cloud and translated first into subtasks and then into commands for the individual flight controllers.
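Here is a minimal sketch of that pipeline with the LLM step stubbed out. `decompose` is a hypothetical stand-in for an LLM agent call that turns the wish into structured subtasks; each subtask is then assigned greedily to the nearest idle drone with the required capability and becomes a command. The data classes, capability names, and command format are all illustrative assumptions, not any real fleet API.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class Drone:
    name: str
    kind: str                    # e.g. "quadcopter", "fixed-wing"
    capabilities: Set[str]       # hypothetical capability tags
    position: Tuple[float, float]
    busy: bool = False


@dataclass
class Subtask:
    action: str                  # hypothetical command vocabulary
    needs: str                   # capability required to perform it
    target: Tuple[float, float]


def decompose(wish: str) -> List[Subtask]:
    """Hypothetical stand-in for an LLM agent. A real system would prompt a model
    with `wish` and validate its structured output; this stub ignores `wish`
    and returns a fixed plan."""
    return [
        Subtask("survey", "long_range", (500.0, 0.0)),
        Subtask("photograph", "camera", (20.0, 10.0)),
    ]


def assign(subtasks: List[Subtask], fleet: List[Drone]
           ) -> Tuple[List[Tuple[str, Dict[str, object]]], List[Subtask]]:
    """Greedy assignment: each subtask goes to the closest idle capable drone."""
    commands: List[Tuple[str, Dict[str, object]]] = []
    unassigned: List[Subtask] = []
    for task in subtasks:
        candidates = [d for d in fleet if not d.busy and task.needs in d.capabilities]
        if not candidates:
            unassigned.append(task)  # would be reported back to the operator
            continue
        drone = min(candidates, key=lambda d: math.dist(d.position, task.target))
        drone.busy = True
        commands.append((drone.name, {"action": task.action, "target": task.target}))
    return commands, unassigned


fleet = [
    Drone("quad-1", "quadcopter", {"camera"}, (0.0, 0.0)),
    Drone("wing-1", "fixed-wing", {"camera", "long_range"}, (100.0, 0.0)),
]
commands, leftover = assign(decompose("Survey the north field and photograph the barn"), fleet)
```

Greedy assignment depends on subtask order; an optimal matching (for example, the Hungarian algorithm) or letting the agent itself reason about assignments are alternatives.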

With autonomous fleets, we have moved from a drone with a little software to a complex software system, heavy on data science, with drone hardware at the edge. Such a system requires security, scalability, availability, compliance, and interoperability with third-party hardware and software.

The autonomous drone market is nascent. The drone industry still consists mostly of manufacturers of proprietary hardware for human pilots. Drone legislation, too, generally expects a licensed pilot to control only one drone, within line of sight. Guessing what the market will demand, and what ongoing research will make possible, feels like writing science fiction. Which drones will be popular: quadcopter, fixed-wing, rolling, walking? Will they communicate with each other when working together to accomplish tasks? Will we train custom LLMs on inter-drone communication and reasoning, as opposed to today's human-centric LLMs? It is said that the rise of the automobile led to the decline of hats; who knows what cultural shifts autonomous drones will bring?